Vision Applications using Vision Transformers

In this article, we look at high- and mid-level vision applications of vision transformers and compare them with CNNs. Specifically, we discuss how vision transformers perform in object detection, segmentation, and pose estimation, as well as in applications such as pedestrian detection and lane detection.

Object Detection:

Transformers have gained significant traction in vision applications, and in several cases they have been shown to outperform CNNs. Transformer-based object detectors have improved modules such as the feature-extraction backbone and the prediction head. These detectors are of two types:

1) transformer-based set prediction

2) transformer-based backbone methods.

General framework of transformer-based object detection

Transformer-based detectors have shown strong results compared with CNN-based detectors, in terms of both accuracy and running speed.

Transformer-based set prediction for detection:

This approach treats detection as a set prediction problem. One classic example is DETR (DEtection TRansformer). DETR eliminates hand-designed components such as anchor generation and non-maximum suppression (NMS) post-processing. It uses a CNN backbone to extract features from the image. The extracted feature maps are flattened, and fixed positional encodings are added before they are fed to the encoder-decoder transformer. The decoder then uses the encoder embeddings to produce output embeddings, and a feed-forward network predicts the final detections, each consisting of a bounding box and a class label. Unlike the original transformer, which produces its predictions sequentially, DETR decodes all objects in parallel. For training, DETR uses a bipartite matching algorithm to pair predictions with ground-truth objects, and the Hungarian loss is computed over the matched pairs. DETR performs on par with Faster R-CNN in object detection.
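
To make the matching step concrete, here is a minimal sketch in plain Python. It is not DETR's actual implementation: the cost (negative class probability plus an L1 box distance) is a simplified assumption, the helper names are hypothetical, and the brute-force search over permutations only works for tiny sets. Real systems use the Hungarian algorithm for this step.

```python
from itertools import permutations

def box_l1(a, b):
    """L1 distance between two boxes given as (cx, cy, w, h)."""
    return sum(abs(x - y) for x, y in zip(a, b))

def match_cost(pred, target):
    """Cost of assigning one prediction to one ground-truth object.

    Assumed simplified form: negative class probability plus L1 box distance.
    """
    probs, box = pred
    label, gt_box = target
    return -probs[label] + box_l1(box, gt_box)

def bipartite_match(preds, targets):
    """Brute-force the lowest-cost one-to-one assignment.

    Entry j of the result is the index of the prediction matched to target j.
    Predictions that are left over would be trained as "no object".
    """
    best_cost, best_assign = float("inf"), None
    for assign in permutations(range(len(preds)), len(targets)):
        cost = sum(match_cost(preds[i], targets[j]) for j, i in enumerate(assign))
        if cost < best_cost:
            best_cost, best_assign = cost, assign
    return list(best_assign)

# Three predictions: (class probabilities, box). Values are made up.
preds = [
    ([0.1, 0.8, 0.1], (0.50, 0.50, 0.20, 0.20)),
    ([0.7, 0.2, 0.1], (0.20, 0.30, 0.10, 0.10)),
    ([0.3, 0.3, 0.4], (0.90, 0.90, 0.05, 0.05)),
]
# Two ground-truth objects: (class label, box).
targets = [
    (0, (0.22, 0.31, 0.10, 0.12)),
    (1, (0.48, 0.52, 0.20, 0.18)),
]
matching = bipartite_match(preds, targets)
```

With these made-up values, target 0 is matched to prediction 1 and target 1 to prediction 0, so the loss would be computed on those two pairs. Because each ground-truth object gets exactly one prediction, duplicate detections are penalized during training, which is why NMS is not needed afterwards.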

The overall architecture of DETR

DETR also has drawbacks: it is less effective on small objects and takes a long time to train. Deformable DETR was introduced to address these. Its attention module attends to a small set of key positions around a reference point, instead of looking at all spatial locations on the image feature maps as traditional multi-head attention does. This drastically reduces the training cost (by 10x) and increases inference speed (by 1.6x). An iterative bounding box refinement method and a two-stage scheme can improve performance further. Various other improvements to DETR have been proposed. The Spatially Modulated Co-Attention (SMCA) module achieves a similar mAP in less training time. The Adaptive Clustering Transformer (ACT) reduces the computation cost of a pre-trained DETR, and multi-task knowledge distillation (MTKD) can reduce the resulting performance drop. Efficient DETR uses better initialization to achieve similar performance with a more compact network.
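
The idea of attending to a few sampled positions instead of the whole feature map can be sketched as follows. This is a single-head, single-scale toy version in plain Python, with hypothetical function names. In Deformable DETR the offsets and attention weights are predicted from the query by learned layers; here they are fixed, made-up inputs.

```python
import math

def bilinear_sample(feature_map, x, y):
    """Bilinearly interpolate a 2D feature map (list of rows) at a fractional (x, y)."""
    h, w = len(feature_map), len(feature_map[0])
    # Clamp to the valid range so samples never fall outside the map.
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    top = feature_map[y0][x0] * (1 - dx) + feature_map[y0][x1] * dx
    bottom = feature_map[y1][x0] * (1 - dx) + feature_map[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def deformable_attend(feature_map, reference, offsets, weights):
    """Weighted sum of features sampled at reference + offset for each sampling point."""
    rx, ry = reference
    return sum(
        w * bilinear_sample(feature_map, rx + ox, ry + oy)
        for (ox, oy), w in zip(offsets, weights)
    )

# 4x4 toy feature map with value 4*row + col.
feature_map = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
offsets = [(-0.5, 0.0), (0.5, 0.0), (0.0, -0.5), (0.0, 0.5)]  # assumed, not learned
weights = [0.25, 0.25, 0.25, 0.25]                            # assumed, sums to 1
output = deformable_attend(feature_map, (1.5, 1.5), offsets, weights)
```

The cost of one query here depends on the number of sampling points (four), not on the number of pixels in the feature map, which is where the savings come from.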

Transformer-Based Backbone for Detection:

As the name suggests, this approach uses a transformer as the backbone of a detection framework such as Faster R-CNN. The input image is divided into patches that are fed to the vision transformer. ViT-FRCNN follows this design, and large-scale pre-training of the transformer backbone can make it more effective. Other vision transformer designs can be effective backbones too; Swin Transformer is a classic example.
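
The patch-splitting step can be illustrated with a small sketch. It assumes a single-channel image stored as a list of rows and a hypothetical helper name; a real backbone would also project each flattened patch to an embedding and add position information.

```python
def split_into_patches(image, patch):
    """Split an H x W image into non-overlapping patch x patch tiles.

    Tiles are returned in row-major order, each flattened into a list.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tile = [image[top + i][left + j] for i in range(patch) for j in range(patch)]
            patches.append(tile)
    return patches

# 4x4 toy image with pixel value 4*row + col, split into 2x2 patches.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = split_into_patches(image, 2)
```

Each flattened patch becomes one input token. Because the tiles are kept in row-major order, the output embeddings can later be rearranged into a 2D feature map for a detection head.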

Pre-training for transformer-based object detection:

Similar to pre-training transformers for NLP applications, pre-training schemes for transformer-based object detection have been proposed. UP-DETR pre-trains the DETR model with an unsupervised pretext task called random query patch detection. On the COCO dataset, UP-DETR outperforms DETR. Pre-trained transformers have also been proposed as backbones: YOLOS, for example, builds a detector on a ViT pre-trained on the ImageNet dataset. In YOLOS, the classification token in ViT is dropped and learnable detection tokens are added.
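
As a rough illustration of how a random query patch task can produce training targets without labels, the sketch below draws random crop boxes from an image. The size ranges and the function name are assumptions made for this example, not values from UP-DETR. The point is only that each crop's own box serves as the regression target, so no human annotation is required.

```python
import random

def random_query_patches(width, height, num_patches, rng):
    """Draw random crop boxes (x, y, w, h) that lie fully inside the image.

    In this sketch each crop acts as a query, and the box it was cut from
    is the target the detector must predict.
    """
    boxes = []
    for _ in range(num_patches):
        w = rng.randint(width // 8, width // 2)    # assumed size range
        h = rng.randint(height // 8, height // 2)  # assumed size range
        x = rng.randint(0, width - w)
        y = rng.randint(0, height - h)
        boxes.append((x, y, w, h))
    return boxes

rng = random.Random(0)  # fixed seed so the example is repeatable
query_boxes = random_query_patches(640, 480, 5, rng)
```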

Segmentation:

Segmentation is broadly categorized into panoptic segmentation, instance segmentation, and semantic segmentation. Let’s look at each of the three.

Transformer for panoptic segmentation:

With a mask head attached to its decoder, DETR becomes a good model for panoptic segmentation. Max-DeepLab predicts segmentation results directly with a mask transformer, without surrogate subtasks such as box detection. Like DETR, Max-DeepLab directly predicts non-overlapping masks and their labels. It uses a dual-path framework that combines a CNN and a transformer.
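
One simple way to obtain non-overlapping masks from a set of predicted masks is to assign every pixel to the mask with the highest score at that pixel. The sketch below shows this per-pixel argmax on toy data. The function name and the scores are made up, and the filtering of low-confidence masks that a full pipeline would apply is left out.

```python
def merge_masks(mask_scores, labels):
    """Assign each pixel to its highest-scoring mask.

    mask_scores[k][y][x] is the score of mask k at pixel (x, y).
    Returns (segment_ids, label_map), both H x W grids.
    """
    num_masks = len(mask_scores)
    height, width = len(mask_scores[0]), len(mask_scores[0][0])
    segment_ids, label_map = [], []
    for y in range(height):
        id_row = []
        for x in range(width):
            scores = [mask_scores[k][y][x] for k in range(num_masks)]
            id_row.append(scores.index(max(scores)))  # argmax over masks
        segment_ids.append(id_row)
        label_map.append([labels[k] for k in id_row])
    return segment_ids, label_map

# Two predicted masks over a 2x2 image, with one class label per mask.
mask_scores = [
    [[0.9, 0.6], [0.2, 0.1]],
    [[0.1, 0.7], [0.8, 0.3]],
]
labels = ["person", "road"]
segment_ids, label_map = merge_masks(mask_scores, labels)
```

Since every pixel receives exactly one mask index, the resulting segments cannot overlap.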

Transformer for Instance segmentation:

VisTR is a video instance segmentation model that predicts instances from a sequence of images. It uses an instance sequence segmentation module to collect mask features from multiple frames and segment the mask sequence with a 3D CNN. Other models, such as ISTR, have also been proposed.

Transformer for semantic segmentation:

SETR (SEgmentation TRansformer) uses an encoder similar to ViT, together with a multi-level feature aggregation module, for pixel-wise segmentation. Segmenter takes the output embeddings of the image patches and obtains class labels with either a point-wise linear decoder or a mask transformer decoder.
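
A point-wise linear decoder can be sketched in a few lines: every patch embedding is multiplied by one weight vector per class, and the class with the highest score wins. The weights, embeddings, and function name below are toy assumptions. A real decoder would also upsample the patch-level map to pixel resolution, which is omitted here.

```python
def linear_decode(patch_embeddings, class_weights, grid_width):
    """Classify each patch embedding with a shared linear layer.

    class_weights[c] is the weight vector of class c (no bias in this sketch).
    Returns a grid of class indices with grid_width patches per row.
    """
    class_ids = []
    for emb in patch_embeddings:
        logits = [sum(e * w for e, w in zip(emb, wc)) for wc in class_weights]
        class_ids.append(logits.index(max(logits)))
    return [class_ids[i:i + grid_width] for i in range(0, len(class_ids), grid_width)]

# Four patch embeddings of dimension 2, in row-major order for a 2x2 patch grid.
patch_embeddings = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9], [0.8, 0.1]]
class_weights = [[1.0, 0.0], [0.0, 1.0]]  # assumed weights for two classes
patch_classes = linear_decode(patch_embeddings, class_weights, 2)
```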

Illustration of SETR

Pose Estimation:

Hand and human pose estimation are foundational topics that have gained significant popularity in the research community. Let’s discuss both briefly.

Transformer for Hand-Pose Estimation:

Various transformers have been proposed for hand pose estimation. In one such architecture, the encoder uses PointNet to extract point-wise features and then produces embeddings with a standard multi-head self-attention module. PointNet++ is additionally used to extract further features. Similar transformers such as HOT-Net have been proposed, along with models that estimate hand pose from a single image. Specifically, in the latter case, 2D joint locations are given as input and 3D pose estimates are produced as output.

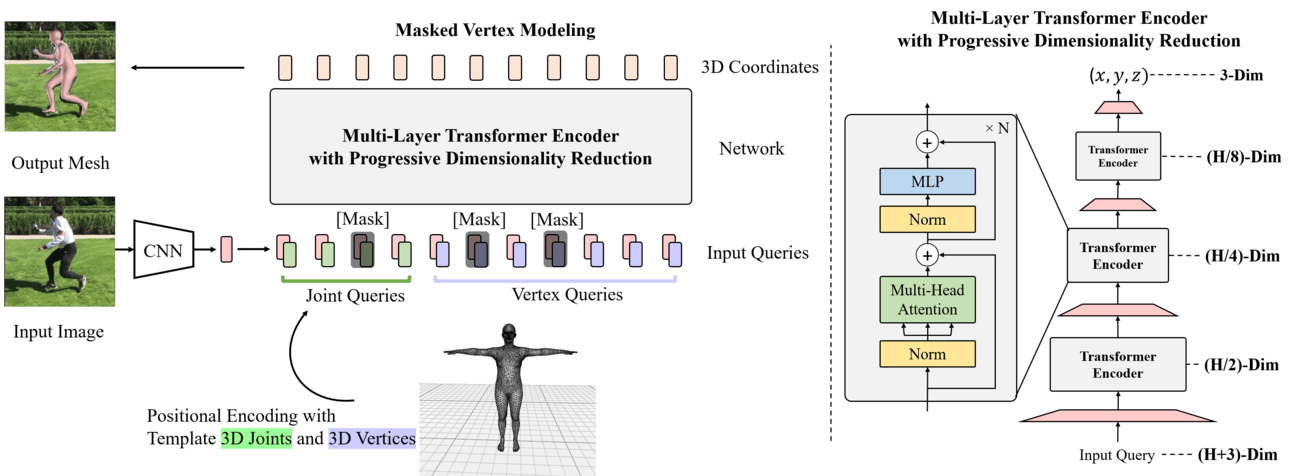

Transformer for Human Pose Estimation:

METRO has been proposed for estimating 3D human pose and mesh from a single image. A CNN extracts image features, and position encoding is then applied. METRO randomly masks some input queries so that the model learns non-local relations. It uses a multi-layer transformer with progressive dimensionality reduction to reduce the embedding dimensions. TransPose is a transformer architecture proposed to learn long-range spatial relations between keypoints. TokenPose embeds keypoints as tokens to learn the relationships among them. Another approach converts pose estimation into a sequence prediction problem and solves it with a transformer. A further model proposes a personalized prediction system that uses unannotated test images of a person to exploit person-specific information, and then uses a transformer to estimate the pose.
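
The random masking of input queries can be pictured with the following sketch. The masking ratio, the `None` placeholder, and the function name are assumptions chosen for illustration, not METRO's actual settings. The intuition is that a masked joint or vertex has to be inferred from the remaining ones, which pushes the model to use non-local relations.

```python
import random

def mask_queries(queries, mask_ratio, rng, mask_token=None):
    """Replace a random subset of queries with a mask token.

    Returns the masked query list and the sorted indices that were masked.
    """
    num_masked = int(round(mask_ratio * len(queries)))
    masked = set(rng.sample(range(len(queries)), num_masked))
    out = [mask_token if i in masked else q for i, q in enumerate(queries)]
    return out, sorted(masked)

rng = random.Random(0)  # fixed seed so the example is repeatable
queries = [f"joint_{i}" for i in range(10)]
masked_queries, masked_idx = mask_queries(queries, 0.3, rng)
```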

Overview of METRO framework

Transformers for other tasks.

Pedestrian Detection:

In pedestrian detection, objects are often densely packed and occluded, which makes them hard to detect with a general-purpose DETR or Deformable DETR. To counter this, the Pedestrian End-to-end Detector (PED) has been introduced. It uses a new decoder called Dense Queries and Rectified Attention field (DQRF) to support dense queries. V-Match has also been proposed, which makes full use of visible annotations to improve performance further.

Lane Detection:

Inspired by PolyLaneNet, LSTR has been proposed to improve performance by combining the transformer's ability to learn global relationships with a CNN's ability to extract low-level features. LSTR treats lane detection as the task of fitting lanes with polynomials and uses neural networks to predict the polynomial parameters. It is optimized with the Hungarian loss. The combination of transformer, CNN, and Hungarian loss makes LSTR precise, fast, and small. Exploiting the fact that lanes are elongated, long-range structures, a new transformer encoder structure has also been proposed for efficient contextual and global feature extraction, which helps especially when the backbone is a small model.
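
To show what "fitting lanes with polynomials" means in practice, the sketch below turns a set of predicted coefficients into lane points. It assumes a plain polynomial x = c0 + c1*y + c2*y^2 in image coordinates, which is a simplification and not LSTR's exact curve parameterization. The coefficients and function names are made up.

```python
def lane_x(coeffs, y):
    """Evaluate x = c0 + c1*y + c2*y^2 + ... using Horner's rule."""
    x = 0.0
    for c in reversed(coeffs):
        x = x * y + c
    return x

def lane_points(coeffs, y_start, y_end, steps):
    """Sample (x, y) points of the lane at evenly spaced image rows."""
    ys = [y_start + (y_end - y_start) * i / (steps - 1) for i in range(steps)]
    return [(lane_x(coeffs, y), y) for y in ys]

# Hypothetical network output for one lane: constant, linear, quadratic terms.
coeffs = [100.0, 0.5, 0.001]
points = lane_points(coeffs, 0.0, 200.0, 5)
```

The network only has to output a handful of numbers per lane instead of a dense per-pixel mask, which is one reason this kind of model can be small and fast.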

Tracking:

Various transformers, such as TMT, TrTr, and TransT, have been proposed for tracking. All of them use Siamese-like tracking pipelines for video object tracking, with encoder-decoder networks that capture global and rich contextual relations. TransTrack is a joint detection-and-tracking pipeline that uses a query-key mechanism to track pre-existing objects and adds a set of learned object queries to detect newly appearing objects. TransTrack achieves 74.5% and 64.5% MOTA on the MOT17 and MOT20 benchmarks, respectively.
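
For readers unfamiliar with the metric, MOTA (multiple object tracking accuracy) combines three error types into a single score: missed objects, false alarms, and identity switches, all divided by the number of ground-truth objects. The sketch below computes it from error counts summed over all frames. The counts are made up for illustration and do not come from any benchmark.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """MOTA = 1 - (FN + FP + IDSW) / GT, with all counts summed over frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt

# Hypothetical error counts for a short sequence with 1000 ground-truth boxes.
score = mota(false_negatives=120, false_positives=60, id_switches=20, num_gt=1000)
```

Note that MOTA can become negative when the total number of errors exceeds the number of ground-truth objects.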

Conclusion:

Transformers have performed well in several high-level tasks, including detection, segmentation, and pose estimation. Key open issues concern input embedding, position encoding, and the prediction loss. Although proposals such as deformable attention, adaptive clustering, and the point transformer improve self-attention from different perspectives, the full potential of transformers and their architectures for high-level vision applications is yet to be explored. It is also an open question whether a CNN is needed in front of the transformer. We are still in the early stages of evaluating transformers on these tasks, and much remains to be uncovered.

Thanks for reading...